
Build Your Own Oracle RAC 11g Cluster on Oracle Linux and iSCSI

by Jeffrey Hunter

Learn how to set up and configure an Oracle RAC 11g Release 2 development cluster on Oracle Linux for less than US$2,700.

The information in this guide is not validated by Oracle, is not supported by Oracle, and should only be used at your own risk; it is for educational purposes only.

Updated November 2009

1. Introduction

One of the most efficient ways to become familiar with Oracle Real Application Clusters (RAC) 11g technology is to have access to an actual Oracle RAC 11g cluster. There's no better way to understand its benefits (including fault tolerance, security, load balancing, and scalability) than to experience them directly. Unfortunately, for many shops, the price of the hardware required for a typical production RAC configuration makes this goal impossible. A small two-node cluster can cost from US$10,000 to well over US$20,000.

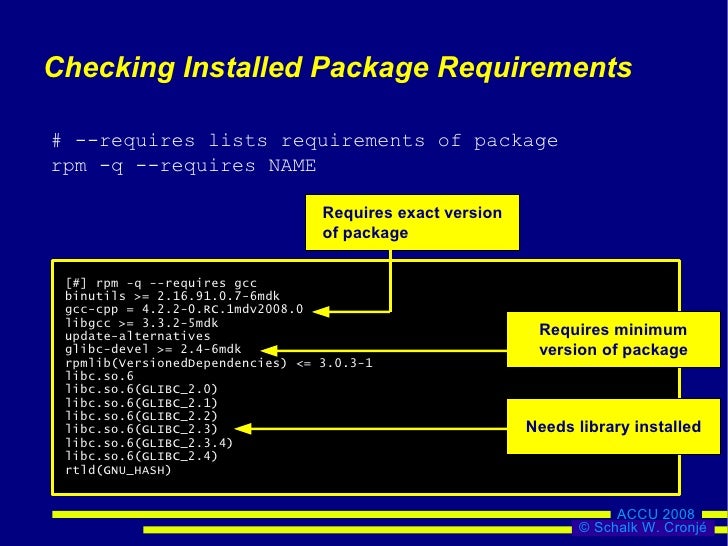

Each RPM includes headers that list any other RPMs that are required to make it work. For example, when I tried to install the GNU C Compiler (gcc) on Fedora Core 3, I got the following message:

    # rpm -i gcc-3.4.2-6.fc3.i386.rpm
    error: Failed dependencies:
        glibc-devel >= 2.2.90-12 is needed by gcc-3.4.2-6.fc3.i386
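The failed check above boils down to comparing the installed glibc-devel version-release with the minimum that gcc requires. The Python sketch below illustrates that comparison in simplified form. It is not rpm's actual rpmvercmp implementation (it ignores epochs and the `~`/`^` operators), and all function names are hypothetical.

```python
import re

def _segments(s):
    # Split a version string into runs of digits or letters; separators
    # such as '.', '_' and '+' are skipped (simplified rpm-style behavior).
    return re.findall(r"\d+|[A-Za-z]+", s)

def vercmp(a, b):
    """Return -1, 0 or 1 when version string a is older, equal or newer than b."""
    sa, sb = _segments(a), _segments(b)
    for x, y in zip(sa, sb):
        if x.isdigit() and y.isdigit():
            # Numeric segments compare as integers, so 90 > 9 and 010 == 10.
            if int(x) != int(y):
                return -1 if int(x) < int(y) else 1
        elif x.isdigit() != y.isdigit():
            # A numeric segment is treated as newer than an alphabetic one.
            return 1 if x.isdigit() else -1
        elif x != y:
            return -1 if x < y else 1
    # All shared segments match: the string with more segments is newer.
    return (len(sa) > len(sb)) - (len(sa) < len(sb))

def _split_version_release(s):
    version, sep, release = s.rpartition("-")
    return (version, release) if sep else (s, "")

def satisfies_ge(installed, required):
    """True if installed 'version-release' >= required 'version[-release]'."""
    iv, ir = _split_version_release(installed)
    rv, rr = _split_version_release(required)
    result = vercmp(iv, rv)
    if result == 0 and rr:
        # Releases are only compared when the requirement names one.
        result = vercmp(ir, rr)
    return result >= 0

# Matches lines like "glibc-devel >= 2.2.90-12 is needed by gcc-3.4.2-6.fc3.i386".
NEEDED = re.compile(r"^\s*(\S+)\s+(>=|<=|>|<|=)\s+(\S+)\s+is needed by\s+(\S+)")

def parse_failed_dependency(line):
    """Return (name, operator, version, needed_by), or None if no match."""
    m = NEEDED.match(line)
    return m.groups() if m else None
```

For the error above, `parse_failed_dependency` yields `("glibc-devel", ">=", "2.2.90-12", "gcc-3.4.2-6.fc3.i386")`; the version-release part of what `rpm -q glibc-devel` reports could then be passed to `satisfies_ge` along with `"2.2.90-12"`. Only the `>=` case is handled here.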

This cost would not even include the heart of a production RAC environment, the shared storage. In most cases, this would be a Storage Area Network (SAN), which generally starts at US$10,000. For those who want to become familiar with Oracle RAC 11g without a major cash outlay, this guide provides a low-cost alternative: configuring an Oracle RAC 11g Release 2 system using commercial off-the-shelf components and downloadable software, at an estimated cost of US$2,200 to US$2,700. The system will consist of a two-node cluster, both nodes running Oracle Enterprise Linux (OEL) Release 5 Update 4 for x86_64, Oracle RAC 11g Release 2 for Linux x86_64, and ASMLib 2.0. All shared disk storage for Oracle RAC will be based on Openfiler release 2.3 x86_64 running on a third node (known in this article as the Network Storage Server).

Although this article should work with Red Hat Enterprise Linux, Oracle Enterprise Linux (available for free) will provide the same if not better stability and already includes the ASMLib software packages (with the exception of the ASMLib userspace libraries, which are a separate download). This guide is provided for educational purposes only, so the setup is kept simple to demonstrate ideas and concepts. For example, the shared Oracle Clusterware files (OCR and voting files) and all physical database files in this article will be set up on only one physical disk, while in practice they should be configured on multiple physical drives. In addition, each Linux node will be configured with only two network interfaces: one for the public network (eth0) and one that will be used for both the Oracle RAC private interconnect and the network storage server for shared iSCSI access (eth1). For a production RAC implementation, the private interconnect should be at least Gigabit, with redundant paths, and should be used only by Oracle to transfer Cluster Manager and Cache Fusion related data. A third dedicated network interface (eth2, for example) should be configured on another redundant Gigabit network for access to the network storage server (Openfiler).
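The gap between this lab layout and the production recommendation can be expressed as a small check. In the hypothetical Python sketch below, each node is modeled as a mapping from interface name to the kinds of traffic it carries; the role labels are illustrative, and only the two rules stated above are tested (a dedicated interconnect and a separate storage network). Link speed and path redundancy are not modeled.

```python
# Each node is modeled as {interface name: set of traffic roles}.
# The role labels ("public", "interconnect", "storage") are illustrative.
LAB_NODE = {"eth0": {"public"}, "eth1": {"interconnect", "storage"}}
PRODUCTION_NODE = {"eth0": {"public"}, "eth1": {"interconnect"}, "eth2": {"storage"}}

REQUIRED_ROLES = ("public", "interconnect", "storage")

def production_issues(nics):
    """Return a list of deviations from the production guidance above."""
    issues = []
    carried = set().union(*nics.values())
    for role in REQUIRED_ROLES:
        if role not in carried:
            issues.append(f"no interface carries {role} traffic")
    for name in sorted(nics):
        roles = nics[name]
        # The interconnect should be used only by Oracle, so any other
        # role on the same interface is flagged.
        if "interconnect" in roles and len(roles) > 1:
            others = ", ".join(sorted(roles - {"interconnect"}))
            issues.append(f"{name}: interconnect also carries {others} traffic")
    return issues
```

For the configuration used in this article, `production_issues(LAB_NODE)` flags eth1 as sharing the interconnect with storage traffic, while `production_issues(PRODUCTION_NODE)` returns an empty list.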

Oracle Documentation

While this guide provides detailed instructions for successfully installing a complete Oracle RAC 11g system, it is by no means a substitute for the official Oracle documentation (see list below). In addition to this guide, users should also consult the following Oracle documents to gain a full understanding of alternative configuration options, installation, and administration with Oracle RAC 11g. Oracle's official documentation site is.

• … 11g Release 2 (11.2) for Linux
• … 11g Release 2 (11.2)
• … 11g Release 2 (11.2) for Linux and UNIX
• … 11g Release 2 (11.2)
• … 11g Release 2 (11.2)
• … 11g Release 2 (11.2)

Network Storage Server

Openfiler is a free, browser-based network storage management utility that delivers file-based Network Attached Storage (NAS) and block-based Storage Area Networking (SAN) in a single framework. The entire software stack interfaces with open source applications such as Apache, Samba, LVM2, ext3, Linux NFS, and iSCSI Enterprise Target. Openfiler combines these ubiquitous technologies into a small, easy-to-manage solution fronted by a powerful web-based management interface. Openfiler supports CIFS, NFS, HTTP/DAV, and FTP; however, we will only be making use of its iSCSI capabilities to implement an inexpensive SAN for the shared storage components required by Oracle RAC 11g.

The operating system and Openfiler application will be installed on one internal SATA disk. A second internal 73GB 15K SCSI hard disk will be configured as a single 'Volume Group' that will be used for all shared disk storage requirements. The Openfiler server will be configured to use this volume group for iSCSI-based storage, which our Oracle RAC 11g configuration will use to store the shared files required by Oracle grid infrastructure and the Oracle RAC database.

Oracle Grid Infrastructure 11g Release 2 (11.2)

With Oracle grid infrastructure 11g Release 2 (11.2), the Automatic Storage Management (ASM) and Oracle Clusterware software are packaged together in a single binary distribution and installed into a single home directory, which is referred to as the Grid Infrastructure home.

You must install the grid infrastructure in order to use Oracle RAC 11g Release 2. After the installer interview process, configuration assistants run to configure ASM and Oracle Clusterware.

While the installation of the combined products is called Oracle grid infrastructure, Oracle Clusterware and Automatic Storage Management remain separate products. After Oracle grid infrastructure is installed and configured on both nodes in the cluster, the next step will be to install the Oracle RAC software on both Oracle RAC nodes. In this article, the Oracle grid infrastructure and Oracle RAC software will be installed on both nodes using the optional Job Role Separation configuration. One OS user will be created to own each Oracle software product: grid for the Oracle grid infrastructure and oracle for the Oracle RAC software. Throughout this article, the user created to own the Oracle grid infrastructure binaries is called the grid user.

This user will own both the Oracle Clusterware and Oracle Automatic Storage Management binaries. The user created to own the Oracle database binaries (Oracle RAC) will be called the oracle user. Both Oracle software owners must have the Oracle Inventory group (oinstall) as their primary group, so that each Oracle software installation owner can write to the central inventory (oraInventory) and so that OCR and Oracle Clusterware resource permissions are set correctly. The Oracle RAC software owner must also have the OSDBA group and the optional OSOPER group as secondary groups.

Automatic Storage Management and Oracle Clusterware Files

As previously mentioned, Automatic Storage Management (ASM) is now fully integrated with Oracle Clusterware in the Oracle grid infrastructure. Oracle ASM and Oracle Database 11g Release 2 provide an enhanced storage solution compared with previous releases. Part of this solution is the ability to store the Oracle Clusterware files, namely the Oracle Cluster Registry (OCR) and the Voting Files (VF, also known as Voting Disks), on ASM.

This feature enables ASM to provide a unified storage solution, storing all the data for the clusterware and the database, without the need for third-party volume managers or cluster file systems. Just like database files, Oracle Clusterware files are stored in an ASM disk group and therefore utilize the ASM disk group configuration with respect to redundancy.

For example, a Normal Redundancy ASM disk group will hold a two-way-mirrored OCR. A failure of one disk in the disk group will not prevent access to the OCR. With a High Redundancy ASM disk group (three-way-mirrored), two independent disks can fail without impacting access to the OCR. With External Redundancy, no protection is provided by Oracle. Oracle only allows one OCR per disk group in order to protect against physical disk failures. When configuring Oracle Clusterware files on a production system, Oracle recommends using either normal or high redundancy ASM disk groups.
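The redundancy rules above reduce to a simple relationship: a disk group keeps one, two, or three copies of the OCR and can lose one fewer disk than it has copies. The Python sketch below encodes that rule. It assumes, as a worst case, that each failed disk held a different copy (that is, sat in a different failure group), and its function names are hypothetical.

```python
# OCR copies kept by each ASM disk group redundancy level, per the text above.
OCR_COPIES = {"external": 1, "normal": 2, "high": 3}

def tolerated_disk_failures(redundancy):
    """Independent disk failures the disk group can absorb without losing the OCR.

    External redundancy keeps a single copy, so ASM itself tolerates no
    failure; any protection must come from the OS or storage hardware.
    """
    return OCR_COPIES[redundancy.lower()] - 1

def ocr_accessible(redundancy, failed_disks):
    """Worst case: every failed disk held a different OCR copy."""
    return failed_disks <= tolerated_disk_failures(redundancy)
```

With these definitions, a normal redundancy disk group tolerates one failed disk and a high redundancy disk group tolerates two, matching the two-way and three-way mirroring described above.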

If disk mirroring is already occurring at either the OS or hardware level, you can use external redundancy. The Voting Files are managed in a similar way to the OCR. They follow the ASM disk group configuration with respect to redundancy, but are not managed as normal ASM files in the disk group. Instead, each voting disk is placed on a specific disk in the disk group. The disk and the location of the Voting Files on the disks are stored internally within Oracle Clusterware. The following example describes how the Oracle Clusterware files are stored in ASM after installing Oracle grid infrastructure using this guide.

To view the OCR, use ASMCMD:

    [grid@racnode1 ~]$ asmcmd
    ASMCMD> ls -l +CRS/racnode-cluster/OCRFILE
    Type     Redund  Striped  Time             Sys  Name
    OCRFILE  UNPROT  COARSE   NOV 22 12:00:00  Y    REGISTRY.253

To view the voting disk, use crsctl query css votedisk:

    [grid@racnode1 ~]$ crsctl query css votedisk
    ##  STATE    File Universal Id                File Name      Disk group
    --  -----    -----------------                ---------      ---------
     1. ONLINE   4cbbd0de4c694f50bfd3857ebd8ad8c4 (ORCL:CRSVOL1) [CRS]
    Located 1 voting disk(s).

If you decide against using ASM for the OCR and voting disk files, Oracle Clusterware still allows these files to be stored on a cluster file system like Oracle Cluster File System release 2 (OCFS2) or an NFS file system. Please note that installing Oracle Clusterware files on raw or block devices is no longer supported, unless an existing system is being upgraded.
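When checking a cluster from scripts, the crsctl query css votedisk listing can be parsed rather than read by eye. The Python sketch below works on the sample output shown in this article. The column layout it expects is inferred from that single sample, so treat the regular expression (and the hypothetical function names) as assumptions to verify against your own output.

```python
import re

# Sample output copied from the crsctl query css votedisk listing in this article.
SAMPLE = """\
##  STATE    File Universal Id                File Name      Disk group
--  -----    -----------------                ---------      ---------
 1. ONLINE   4cbbd0de4c694f50bfd3857ebd8ad8c4 (ORCL:CRSVOL1) [CRS]
Located 1 voting disk(s).
"""

# One row: index, state, 32-hex-digit File Universal Id, (file name), [disk group].
ROW = re.compile(
    r"^\s*(\d+)\.\s+(\w+)\s+([0-9a-f]{32})\s+\((.+?)\)\s+\[(.+?)\]\s*$"
)
SUMMARY = re.compile(r"^Located (\d+) voting disk\(s\)\.", re.MULTILINE)

def parse_votedisks(text):
    """Return one dict per voting disk row found in the crsctl output."""
    disks = []
    for line in text.splitlines():
        m = ROW.match(line)
        if m:
            index, state, fuid, file_name, disk_group = m.groups()
            disks.append({
                "index": int(index),
                "state": state,
                "file_universal_id": fuid,
                "file_name": file_name,
                "disk_group": disk_group,
            })
    return disks

def all_online(text):
    """True if every parsed row is ONLINE and the row count matches the summary line."""
    disks = parse_votedisks(text)
    summary = SUMMARY.search(text)
    if not disks or summary is None:
        return False
    return (len(disks) == int(summary.group(1))
            and all(d["state"] == "ONLINE" for d in disks))
```

For the sample above, `parse_votedisks(SAMPLE)` returns a single entry on ORCL:CRSVOL1 in disk group CRS, and `all_online(SAMPLE)` is true.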

Previous versions of this guide used OCFS2 for storing the OCR and voting disk files. This guide will store the OCR and voting disk files on ASM in an ASM disk group named +CRS using external redundancy, which means one OCR location and one voting disk location. The ASM disk group should be created on shared storage and be at least 2GB in size. The Oracle physical database files (data, online redo logs, control files, archived redo logs) will be installed on ASM in an ASM disk group named +RACDB_DATA, while the Fast Recovery Area will be created in a separate ASM disk group named +FRA.
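The disk group layout described above can be written down as a small plan and sanity-checked before any volumes are carved out in Openfiler. In the Python sketch below, the disk group names, their contents, the external redundancy of +CRS, and the 2GB minimum come from the text. The individual sizes are hypothetical placeholders, and the 73GB capacity used in the example is the raw size of the shared SCSI disk, so usable space will be somewhat lower.

```python
# Disk group plan for this guide. Names and contents follow the text;
# the sizes in GB are hypothetical placeholders for a lab setup.
PLAN = {
    "CRS": {"redundancy": "external", "size_gb": 2,
            "holds": ["OCR", "voting disk"]},
    "RACDB_DATA": {"size_gb": 32,
                   "holds": ["data files", "online redo logs",
                             "control files", "archived redo logs"]},
    "FRA": {"size_gb": 32, "holds": ["Fast Recovery Area"]},
}

MIN_CRS_GB = 2  # minimum size for the +CRS disk group stated in the text

def plan_issues(plan, capacity_gb):
    """Return problems with a disk group plan; an empty list means it passes."""
    issues = []
    crs = plan.get("CRS")
    if crs is None:
        issues.append("no +CRS disk group for the OCR and voting disk")
    elif crs["size_gb"] < MIN_CRS_GB:
        issues.append(f"+CRS is {crs['size_gb']} GB; it should be at least {MIN_CRS_GB} GB")
    if "FRA" not in plan:
        issues.append("no separate +FRA disk group for the Fast Recovery Area")
    total = sum(dg["size_gb"] for dg in plan.values())
    if total > capacity_gb:
        issues.append(f"plan needs {total} GB but only {capacity_gb} GB is available")
    return issues
```

With the placeholder sizes, `plan_issues(PLAN, 73)` returns an empty list because the three disk groups total 66 GB; shrinking +CRS below 2 GB or lowering the capacity makes the corresponding problem appear.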